SignOmni — American Sign Language Search and Generative Models to Support Early Learners

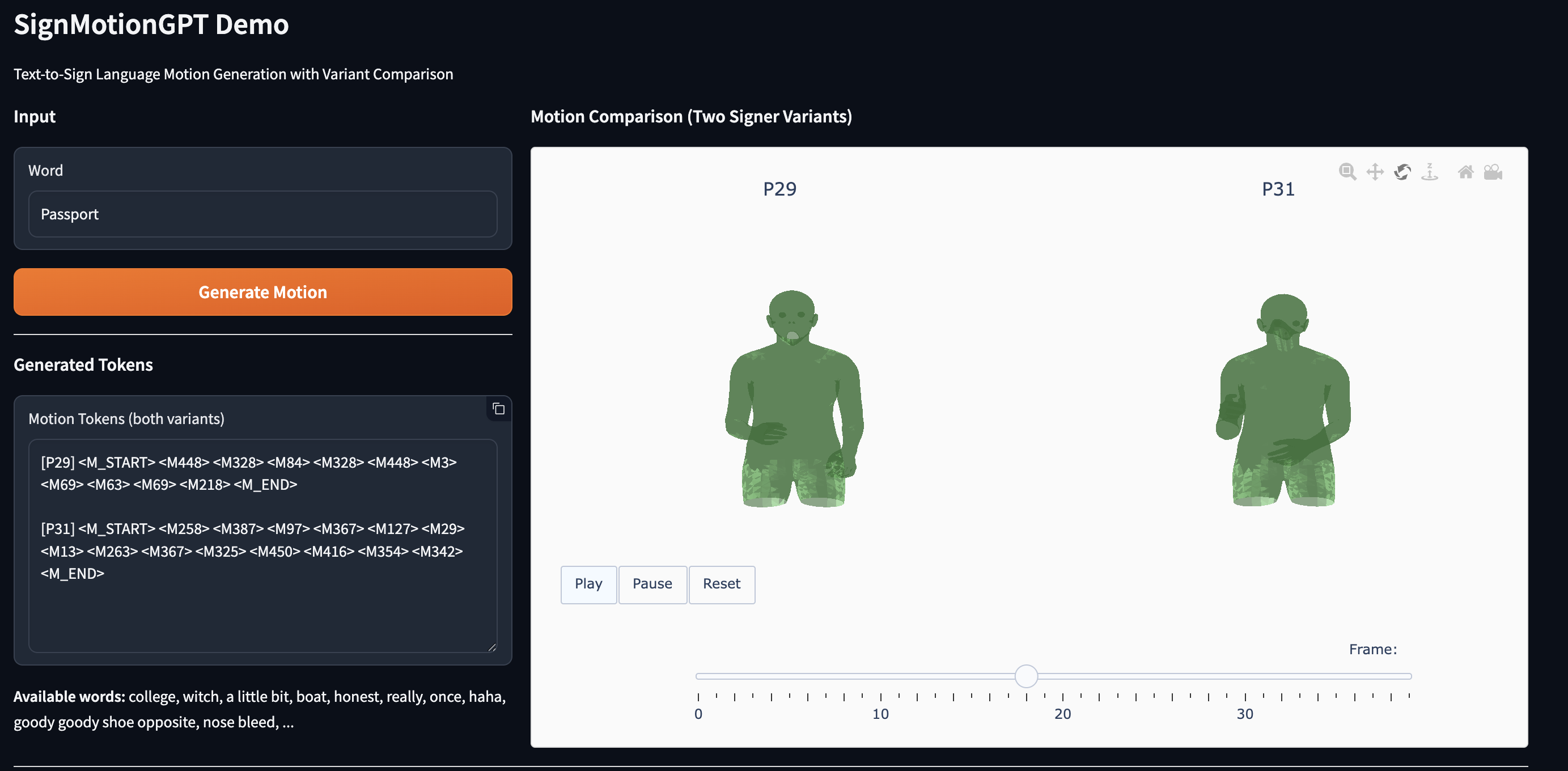

Build SignMotionGPT by pre-training an LLM for 3D ASL generation, along with quantitative and qualitative evaluation.

We launched the Research Acceleration Program to support the rapid growth of early-stage AI researchers. Its focus is human-centered multimodal intelligence, spanning generative AI, multimodal large models, and LLM-based agentic systems, with applications in education, sign language communication, and digital humans.

This is a structured mentoring program designed to accelerate research productivity and help students build strong technical and academic foundations. The program offers guidance on:

Projects are tailored to each student’s background, research interests, and starting level, with difficulty calibrated accordingly.

If you’re interested, please feel free to send me your resume. Spots are limited and will be filled on a first-come, first-served basis.

The projects below are updated periodically. The demos are fully interactive, but because they are hosted on free-tier Hugging Face resources, they may go to sleep when idle, so the first run can take a while as the demo wakes up.

SignOmni — American Sign Language Search and Generative Models to Support Early Learners

Build SignMotionGPT by pre-training an LLM for 3D ASL generation, along with quantitative and qualitative evaluation.

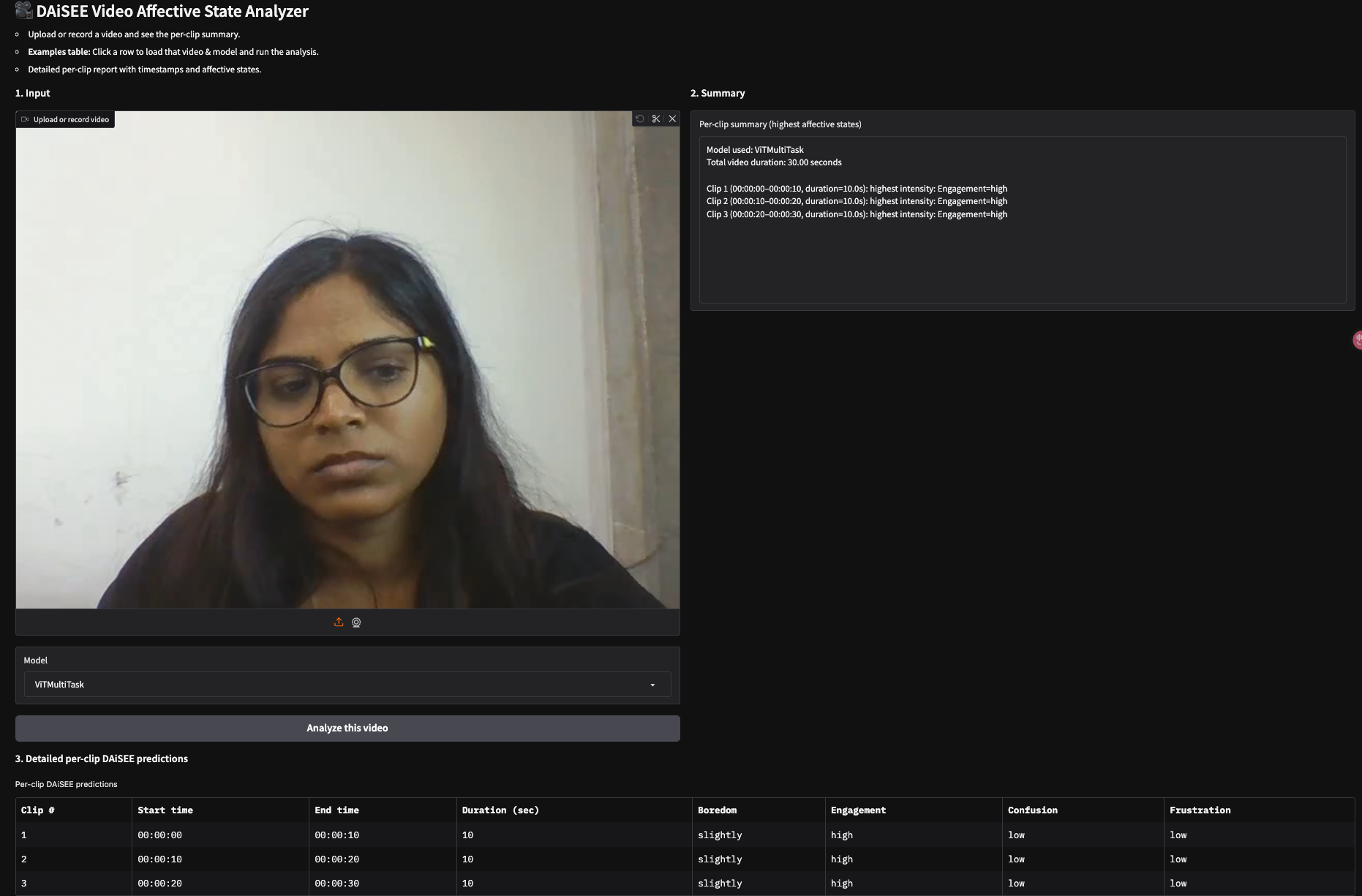
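The description says SignMotionGPT pre-trains an LLM for 3D ASL generation but does not say how motion becomes LLM input. A common approach in motion-language models, assumed here rather than confirmed by the project, is to quantize each pose frame to its nearest vector in a learned codebook and treat the resulting indices as extra vocabulary tokens. The sketch below shows only that lookup; the codebook values, the 2-D features, and the `vocab_offset` of 32000 are hypothetical, and training the codebook (e.g., with a VQ-VAE) is not shown.

```python
import numpy as np

def quantize_motion(frames: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Map each pose frame to the index of its nearest codebook vector.

    frames:   (T, D) per-frame pose features (assumed, e.g. flattened 3D joints)
    codebook: (K, D) code vectors, assumed to be already learned
    returns:  (T,) integer token ids in [0, K)
    """
    # Squared Euclidean distance from every frame to every code vector: (T, K)
    dists = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def to_llm_tokens(codes: np.ndarray, vocab_offset: int) -> list[int]:
    """Shift motion codes past the text vocabulary so both share one id space."""
    return [vocab_offset + int(c) for c in codes]

# Toy example with a hypothetical 3-entry codebook and 2-D "pose" features.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
frames = np.array([[0.1, -0.1], [0.9, 1.2], [4.0, 4.5], [1.1, 0.8]])
codes = quantize_motion(frames, codebook)
tokens = to_llm_tokens(codes, vocab_offset=32000)
```

Once motion is a token sequence, an LLM can be trained on it with the usual next-token objective, and generated tokens are mapped back to poses through the same codebook.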

SCOPE – Student Cognitive Observation, Perception, and Explanation

Analyze students’ cognitive states (engagement, confusion, frustration, and boredom), along with the intensity level of each state, from image-based data collected during online study sessions.

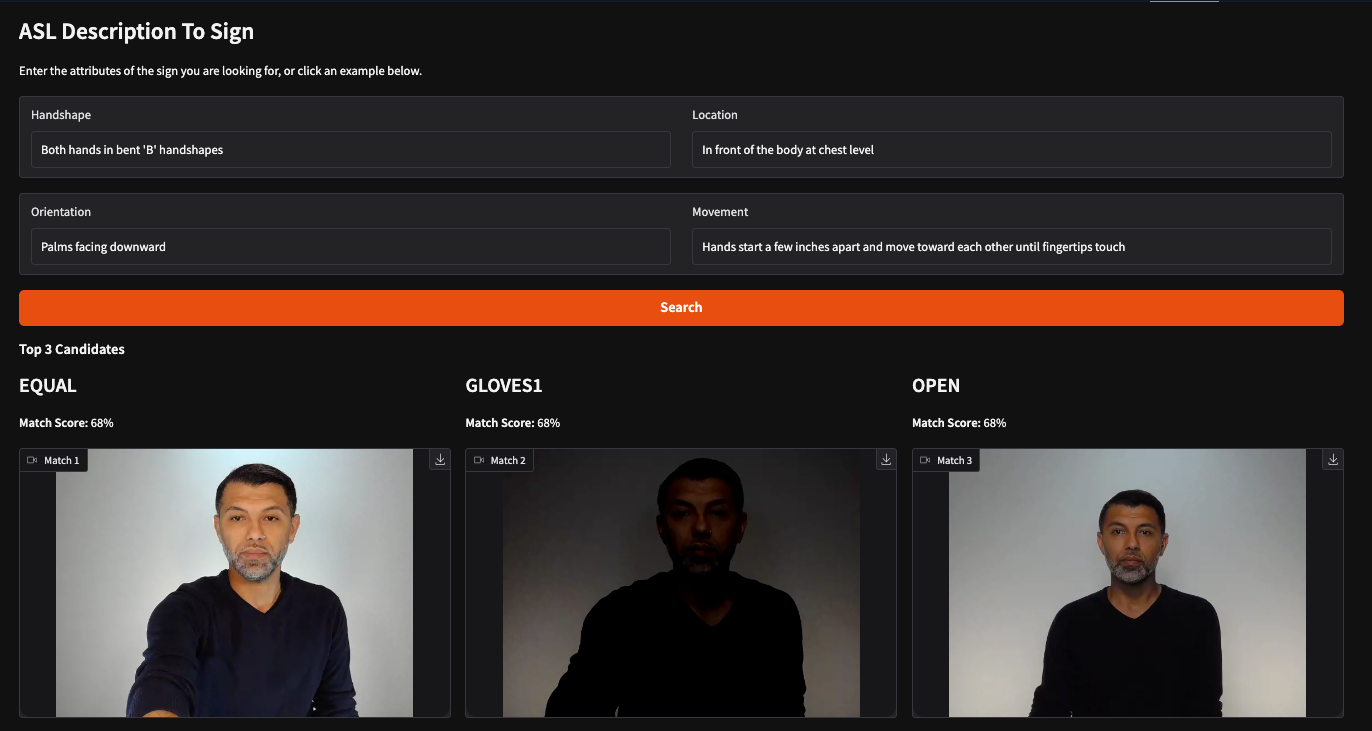

SignOmni — American Sign Language Search and Generative Models to Support Early Learners

Build a retrieval-augmented generation (RAG) system that maps sign language descriptions to signs using key linguistic features such as handshape, palm orientation, movement, and location.
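To make the retrieval step concrete, here is a minimal sketch that ranks lexicon entries by how many of the four linguistic features (handshape, palm orientation, movement, location) match a parsed description. It is a deliberately simple stand-in: a real RAG pipeline would more likely extract features with an LLM and retrieve with embeddings, and the generation step is not shown. The glosses `SIGN_A` to `SIGN_C`, their feature values, and the `weights` parameter are placeholders, not real lexicon data.

```python
from dataclasses import dataclass
from typing import Optional

FEATURES = ("handshape", "palm_orientation", "movement", "location")

@dataclass(frozen=True)
class SignEntry:
    gloss: str
    handshape: str
    palm_orientation: str
    movement: str
    location: str

def score(entry: SignEntry, query: dict[str, str], weights: dict[str, float]) -> float:
    """Sum the weights of query features that exactly match the entry (default weight 1.0)."""
    return sum(
        weights.get(f, 1.0)
        for f in FEATURES
        if f in query and getattr(entry, f) == query[f]
    )

def retrieve(lexicon: list[SignEntry], query: dict[str, str],
             weights: Optional[dict[str, float]] = None, k: int = 3) -> list[SignEntry]:
    """Return the k entries with the highest feature-match score."""
    weights = weights or {}
    return sorted(lexicon, key=lambda e: score(e, query, weights), reverse=True)[:k]

# Placeholder lexicon; glosses and feature values are illustrative only.
lexicon = [
    SignEntry("SIGN_A", "B", "palm-down", "circular", "chest"),
    SignEntry("SIGN_B", "5", "palm-in", "tap", "chin"),
    SignEntry("SIGN_C", "B", "palm-in", "tap", "chest"),
]
# Features assumed to be already parsed from a learner's description.
query = {"handshape": "B", "movement": "tap", "location": "chest"}
top = retrieve(lexicon, query, k=2)
```

Features the learner leaves out simply do not contribute, which lets partial descriptions ("a tapping sign near the chest") still return ranked candidates.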

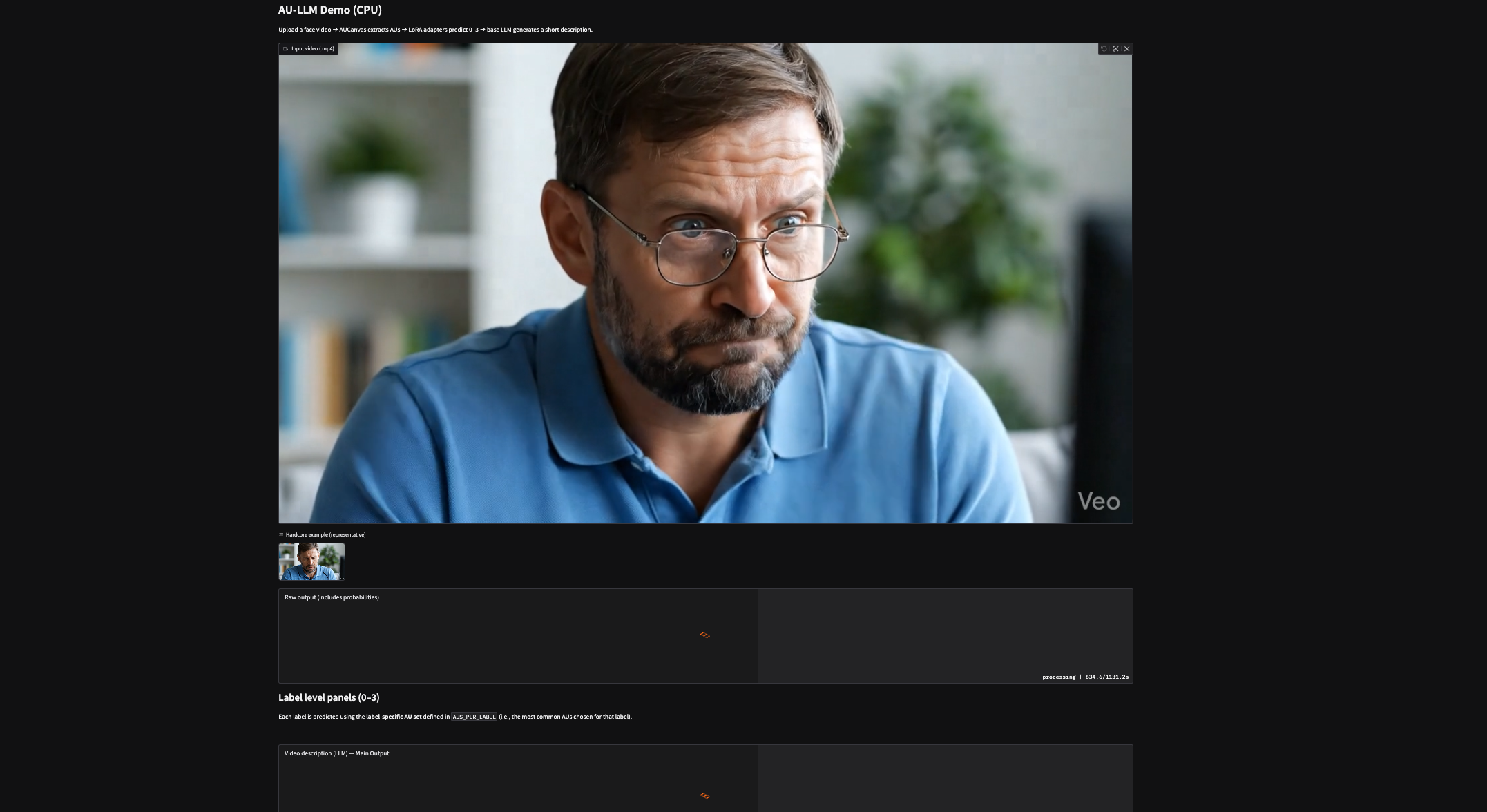

SCOPE – Student Cognitive Observation, Perception, and Explanation

Branch Goal: Analyze students’ cognitive states—engagement, confusion, frustration, and boredom—by leveraging facial Action Units (AUs) and fine-tuned large language models for deeper interpretability.
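One way facial Action Units can feed a language model is to render per-frame AU intensities as text and place that text in a prompt. The sketch below assumes intensities on a 0 to 5 scale, a hypothetical activation threshold of 1.0, and an illustrative subset of AUs labeled with their standard FACS names; the prompt wording is also an assumption, not the project's actual template.

```python
# Illustrative subset of FACS Action Units with their standard names.
AU_NAMES = {
    "AU01": "inner brow raiser",
    "AU02": "outer brow raiser",
    "AU04": "brow lowerer",
    "AU06": "cheek raiser",
    "AU12": "lip corner puller",
    "AU15": "lip corner depressor",
}

def describe_aus(intensities: dict[str, float], threshold: float = 1.0) -> str:
    """Describe active AUs as text; intensities are assumed to be on a 0-5 scale."""
    active = sorted(
        (au, v) for au, v in intensities.items() if v >= threshold and au in AU_NAMES
    )
    if not active:
        return "No facial action units are active."
    parts = [f"{au} ({AU_NAMES[au]}) at intensity {v:.1f}" for au, v in active]
    return "Active facial action units: " + "; ".join(parts) + "."

def build_prompt(intensities: dict[str, float]) -> str:
    """Hypothetical prompt asking an LLM to infer and explain the cognitive state."""
    return (
        describe_aus(intensities) + "\n"
        "Question: Which cognitive state (engagement, confusion, frustration, boredom) "
        "best fits this student, and at what intensity? Explain briefly."
    )

prompt = build_prompt({"AU04": 2.5, "AU12": 0.3, "AU01": 1.2})
```

Because the model sees named facial actions rather than raw pixels, its answer can cite them directly, which is the kind of interpretability the branch goal targets.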

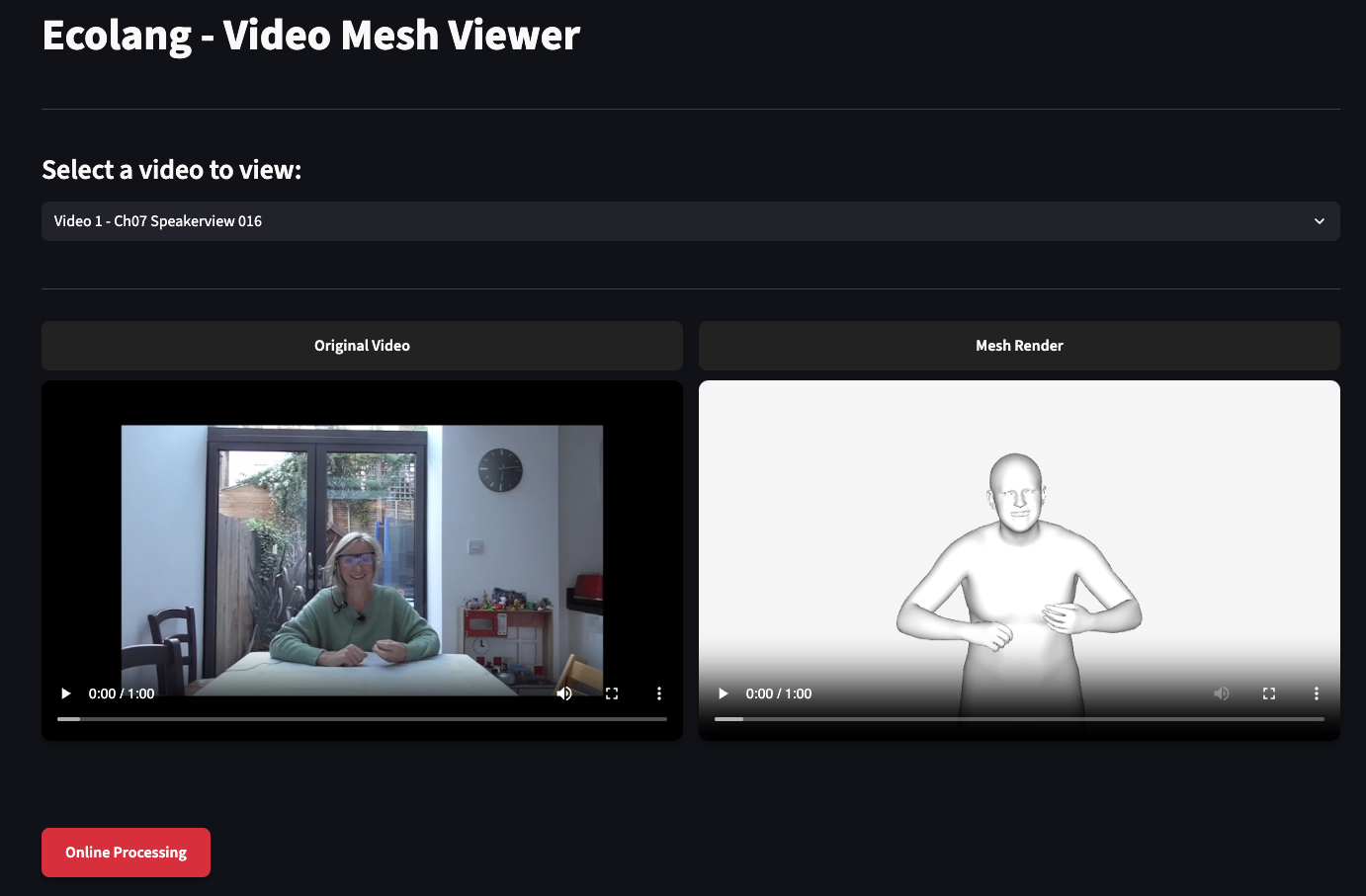

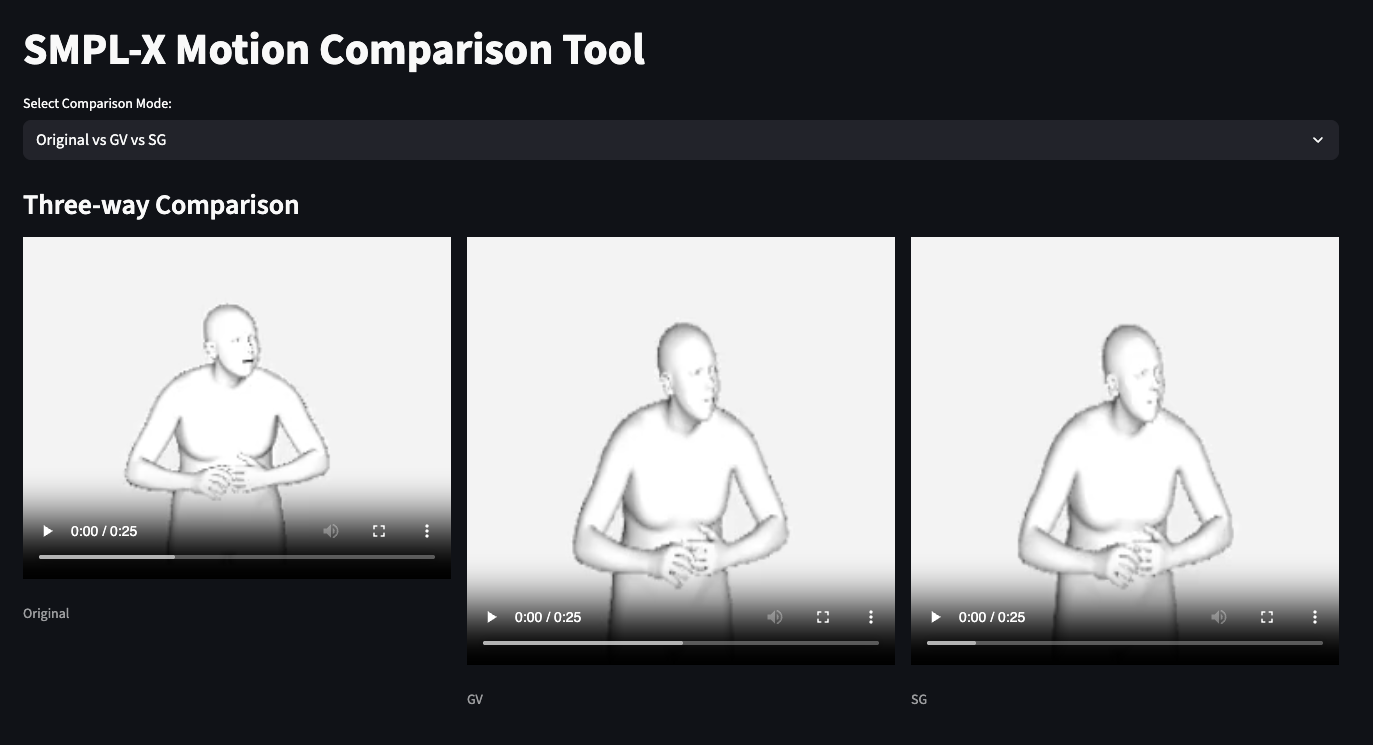

StrategyGen: Generating Adult–Child Interaction Strategies for Early Education

Reconstruct 3D body meshes from 2D interaction videos, and train an audio-driven generative model that produces matching 3D body motion.
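Training an audio-driven motion model requires pairing each motion frame with the audio features at the same time, and audio features are usually computed at a different rate than video. A minimal sketch, assuming (N, C) audio features at a known rate and linear interpolation onto the motion frame times; the 50 Hz and 25 fps figures in the example are hypothetical.

```python
import numpy as np

def align_audio_to_motion(audio_feats: np.ndarray, audio_hz: float,
                          motion_fps: float, n_motion_frames: int) -> np.ndarray:
    """Linearly resample (N, C) audio features onto the motion frame timeline.

    Returns an (n_motion_frames, C) array whose row t pairs with motion frame t.
    """
    audio_t = np.arange(audio_feats.shape[0]) / audio_hz   # audio frame times (s)
    motion_t = np.arange(n_motion_frames) / motion_fps     # motion frame times (s)
    # np.interp is 1-D, so interpolate each feature channel separately;
    # times beyond the last audio frame are clamped to its value.
    return np.stack(
        [np.interp(motion_t, audio_t, audio_feats[:, c])
         for c in range(audio_feats.shape[1])],
        axis=1,
    )

# Toy example: one feature channel at 50 Hz, motion at 25 fps.
audio_feats = np.arange(10, dtype=float)[:, None]
aligned = align_audio_to_motion(audio_feats, audio_hz=50.0, motion_fps=25.0, n_motion_frames=5)
```

Linear interpolation is only one choice; windowed pooling of the audio features around each motion frame is a common alternative when features are noisy.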

StrategyGen: Generating Adult–Child Interaction Strategies for Early Education

Explore multiple denoising methods to reduce jitter in reconstructed 3D meshes, and develop an automated denoising pipeline.
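As a baseline for comparing denoising methods, the sketch below applies a centered moving average along the time axis of a (T, V, 3) vertex or joint sequence and measures jitter as the mean magnitude of the second finite difference (frame-to-frame acceleration). Both the filter and the jitter metric are illustrative choices, not the project's pipeline, and the example uses synthetic data.

```python
import numpy as np

def moving_average_smooth(seq: np.ndarray, window: int = 5) -> np.ndarray:
    """Centered moving average over time (axis 0) of a (T, V, 3) sequence."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Repeat the first/last frame so the output keeps all T frames.
    pad = [(half, half)] + [(0, 0)] * (seq.ndim - 1)
    padded = np.pad(seq, pad, mode="edge")
    # Cumulative-sum trick: each window sum is a difference of two prefix sums.
    csum = np.cumsum(padded, axis=0)
    csum = np.concatenate([np.zeros((1,) + seq.shape[1:]), csum], axis=0)
    return (csum[window:] - csum[:-window]) / window

def jitter(seq: np.ndarray) -> float:
    """Mean per-point acceleration magnitude (second finite difference over time)."""
    return float(np.linalg.norm(np.diff(seq, n=2, axis=0), axis=-1).mean())

# Synthetic example: straight-line trajectories for 10 points plus Gaussian noise.
rng = np.random.default_rng(0)
T, V = 100, 10
clean = np.linspace(0.0, 1.0, T)[:, None, None] * np.ones((1, V, 3))
noisy = clean + rng.normal(scale=0.01, size=clean.shape)
smoothed = moving_average_smooth(noisy, window=5)
```

A moving average is easy to reason about but also blurs fast, genuine motion such as quick hand movements; Savitzky-Golay filtering (`scipy.signal.savgol_filter`) or adaptive filters like the One Euro filter are natural candidates to compare against it using the same jitter metric.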

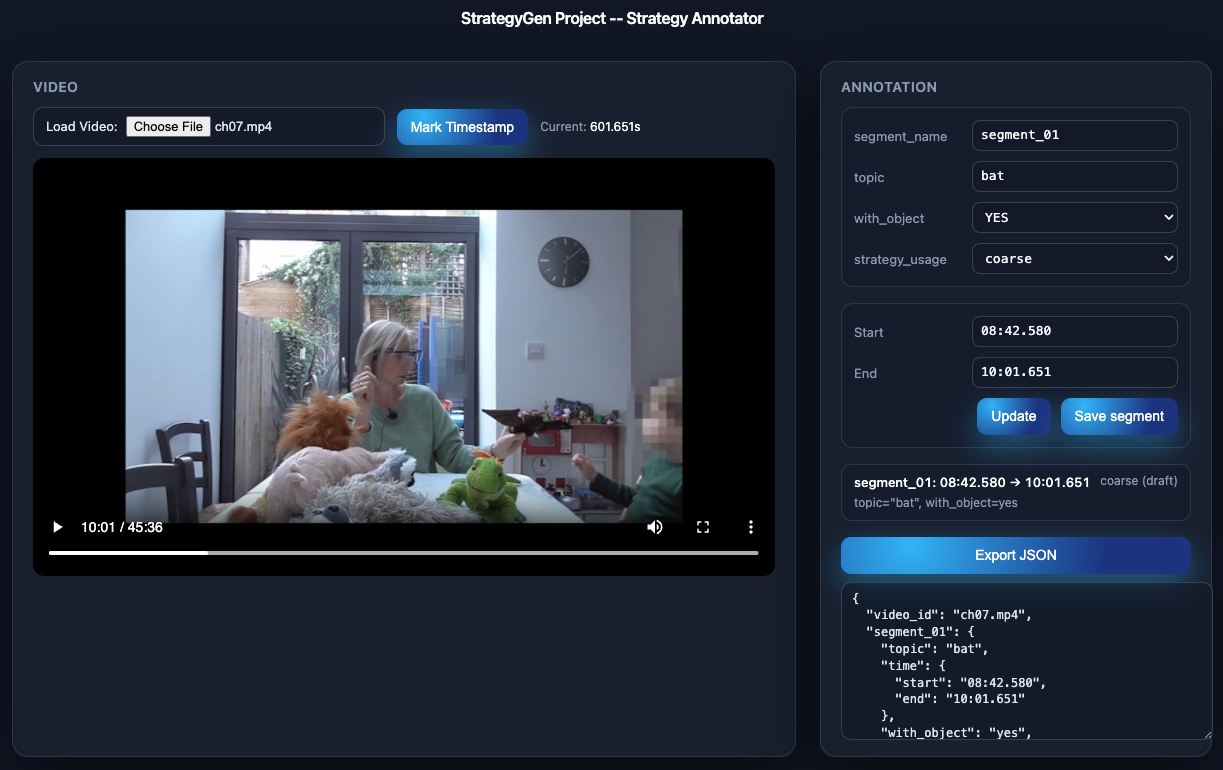

StrategyGen: Generating Adult–Child Interaction Strategies for Early Education

Given an interaction video, annotate the adult’s strategies along with their timestamps, then develop an end-to-end detection pipeline.
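A detection pipeline typically outputs per-frame labels, while annotations are timestamped segments, so the two must be converted and compared. The sketch below merges consecutive frames with the same label into segments and scores a pair of segments by temporal intersection-over-union (tIoU). The strategy names `"praise"` and `"question"` and the 2 fps frame rate are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Segment:
    label: str    # strategy name (hypothetical label set)
    start: float  # seconds, inclusive
    end: float    # seconds, exclusive

def frames_to_segments(frame_labels: Sequence[Optional[str]], fps: float) -> list[Segment]:
    """Merge consecutive frames with the same label; None means no strategy."""
    segments: list[Segment] = []
    run_label: Optional[str] = None
    run_start = 0
    # A trailing None sentinel flushes the final run.
    for i, label in enumerate([*frame_labels, None]):
        if label != run_label:
            if run_label is not None:
                segments.append(Segment(run_label, run_start / fps, i / fps))
            run_label, run_start = label, i
    return segments

def temporal_iou(a: Segment, b: Segment) -> float:
    """Temporal intersection-over-union of two segments (labels ignored)."""
    inter = max(0.0, min(a.end, b.end) - max(a.start, b.start))
    union = (a.end - a.start) + (b.end - b.start) - inter
    return inter / union if union > 0 else 0.0

frames = [None, "praise", "praise", None, "question", "question", "question"]
segs = frames_to_segments(frames, fps=2.0)
```

A predicted segment is then usually counted as correct when its label matches an annotation and their tIoU exceeds a chosen threshold.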